NMFMatch

Sound matching using real-time non-negative matrix factorisation

NMFMatch is designed to work with bases taken from a BufNMF analysis. Please visit the BufNMF Overview to learn about bases before proceeding.

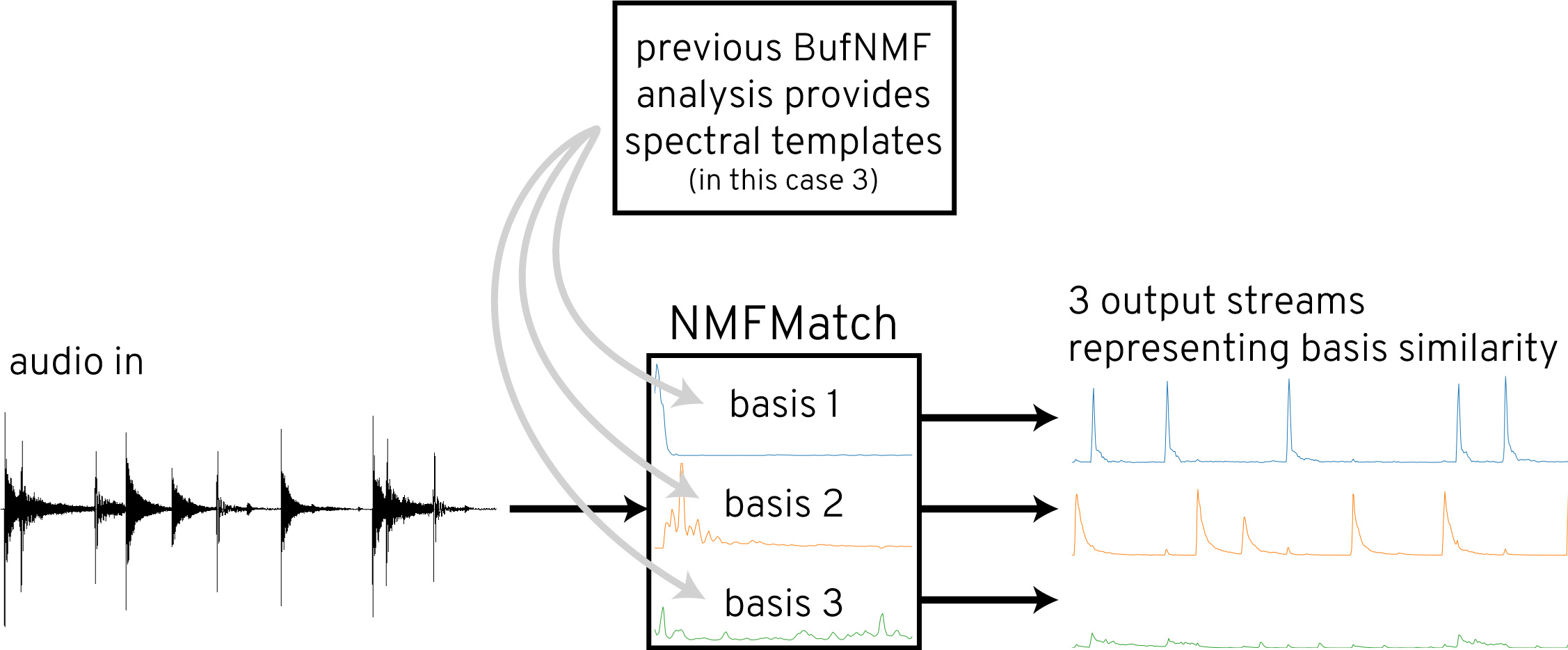

NMFMatch compares the spectrum of an incoming audio signal against a set of spectral templates provided by the user (such as bases from a BufNMF analysis). It continuously outputs values indicating how similar the spectrum of the incoming signal is to each of the provided bases (0 = more dissimilar; higher values = more similar). NMFMatch can be used as the foundation of a classifier to estimate when certain sounds are present.

NMFMatch compares real-time audio against NMF analysis bases and outputs values indicating how similar the incoming signal is to the spectral templates provided.
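One common way to compute such similarity values is to hold the bases fixed and solve only for the activations of each incoming spectral frame. The Python sketch below does this with the standard multiplicative update for a Euclidean cost. The function name `match_frame`, the iteration count, and the toy spectra are hypothetical, and this is an illustration of the idea rather than a description of how NMFMatch is implemented.

```python
def match_frame(spectrum, bases, iterations=10, eps=1e-9):
    """Estimate non-negative activations h so that
    sum_k h[k] * bases[k] approximates the given magnitude spectrum.

    The bases are never modified; only the activations are updated.
    """
    n_bins = len(spectrum)
    n_bases = len(bases)
    h = [1.0] * n_bases  # start from equal, positive activations
    for _ in range(iterations):
        # Reconstruct the spectrum from the current activations.
        approx = [sum(h[k] * bases[k][f] for k in range(n_bases))
                  for f in range(n_bins)]
        # Multiplicative update (Euclidean cost): h <- h * (W^T v) / (W^T W h)
        for k in range(n_bases):
            num = sum(bases[k][f] * spectrum[f] for f in range(n_bins))
            den = sum(bases[k][f] * approx[f] for f in range(n_bins)) + eps
            h[k] *= num / den
    return h


# Toy example (assumed data): two 4-bin templates and one incoming frame
# that contains only the first template, at twice its magnitude.
toy_bases = [[1.0, 0.0, 0.0, 0.0],
             [0.0, 0.0, 1.0, 0.0]]
toy_frame = [2.0, 0.0, 0.0, 0.0]
toy_activations = match_frame(toy_frame, toy_bases)
```

Running this once per spectral frame yields one value per basis over time, which is the kind of output described above.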

The bases from the BufNMF Overview example contain spectral templates for the (1) kick drum, (2) snare drum, and (3) hi-hat components of the original drum loop. One can use these bases in NMFMatch to determine how similar an audio signal is to each of these spectral templates at any given point in time.

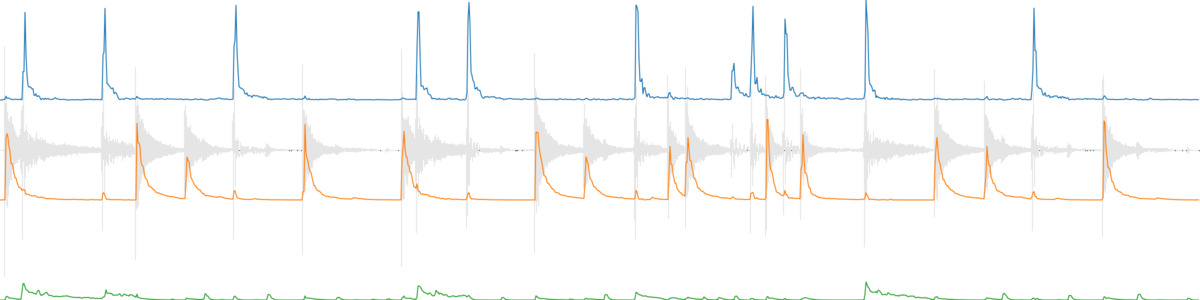

First, let’s provide these bases and send the original drum loop through NMFMatch (the plot below shows the recorded real-time output values of NMFMatch). This shows how similar the audio signal of the original drum loop is to each of these spectral templates at each moment in time.

Recorded output values of NMFMatch when the original drum loop is sent through it using the bases derived from a BufNMF analysis of this drum loop. (blue = kick drum, orange = snare drum, green = hi-hat)

It makes sense that these similarity curves look like the activations from the original BufNMF analysis. Just like the activations, they indicate how present each spectral template is at a given moment in time. Because each spectral template corresponds to a different drum instrument in this case, the curves look like amplitude envelopes of the individual drum instruments.

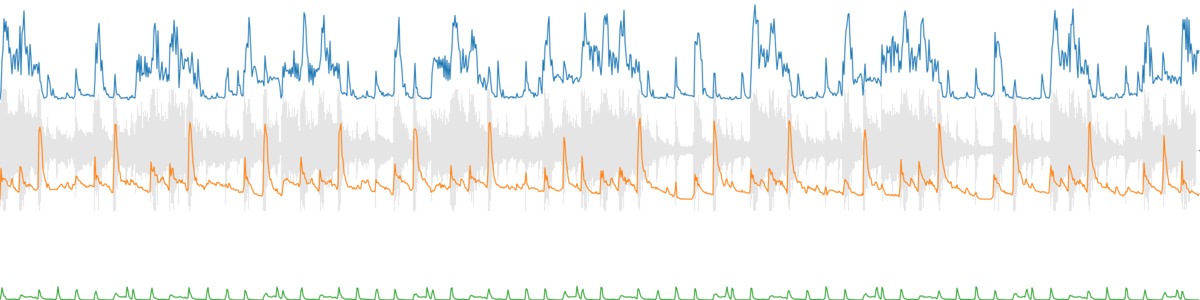

Next, let’s provide the same bases (from the drum loop) but send a different audio signal into NMFMatch. Here we’ll use an excerpt from an ensemble recording. (The plot below again shows the recorded output values of NMFMatch.)

Recorded output values of NMFMatch when this song is sent through it using the bases derived from a BufNMF analysis of the drum loop. (blue = output based on the 'kick drum' basis, orange = output based on the 'snare drum' basis, green = output based on the 'hi-hat' basis)

Again, one sees what looks like activations for each of the drum loop’s bases. To get a sense of what these curves represent, one can use NMFFilter to hear what these three components contain.

NMFFilter has separated the kick drum, snare drum, and hi-hat sounds from this song relatively well (because those are the bases from the drum loop!). Of course, there are other sounds in each of these components as well, since all of the sound in the song recording has to be represented by these three components. One could consider the output of NMFMatch to roughly represent the presence of a kick drum, snare drum, or hi-hat in this song.

Training on a Subset of Audio

This field recording contains various moments of a dog barking.

Because this recording is only 22 seconds long, one could use BufNMF to decompose the buffer into two or three components and then see if one of those contains just the dog’s barks. The activation of such a component would represent where in the buffer the dog’s barks are present.

If this buffer were much longer (an hours-long field recording, for example), finding all the dog barks with BufNMF alone would take far too long to compute. In this example we’ll use BufNMF to decompose a short section of the field recording (four seconds) to find a basis for the dog’s bark. Then we’ll use NMFMatch to find where in the longer recording that basis (i.e., the dog bark) occurs.

First, let’s perform BufNMF on just the first four seconds of this field recording because we know the dog’s bark is represented there. We will specify 2 components, hoping that BufNMF will decompose these four seconds into (1) the dog bark and (2) the “rest”.
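Restricting the analysis to the first four seconds means converting that duration into a number of sample frames. A small sketch of the arithmetic in Python follows. The 44.1 kHz sample rate and the hop size of 512 samples are assumptions, so check the actual sound file and FFT settings. Both helper names are hypothetical.

```python
def seconds_to_samples(seconds, sample_rate):
    """Convert a duration in seconds to a whole number of sample frames."""
    return int(round(seconds * sample_rate))


def approx_spectral_frames(num_samples, hop_size):
    """Rough count of full hops that fit in the region.

    The exact number of analysis frames depends on windowing and padding.
    """
    return num_samples // hop_size


SAMPLE_RATE = 44100  # assumed sample rate of the field recording
HOP_SIZE = 512       # assumed FFT hop size

training_samples = seconds_to_samples(4, SAMPLE_RATE)
training_frames = approx_spectral_frames(training_samples, HOP_SIZE)
```

Under these assumptions the four-second excerpt spans 176400 samples, or roughly 344 spectral frames, which is the amount of data the two-component decomposition has to work with.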

BufNMF seems to have done a good job of isolating the dog bark in one component and the “rest” in another.

Activations:

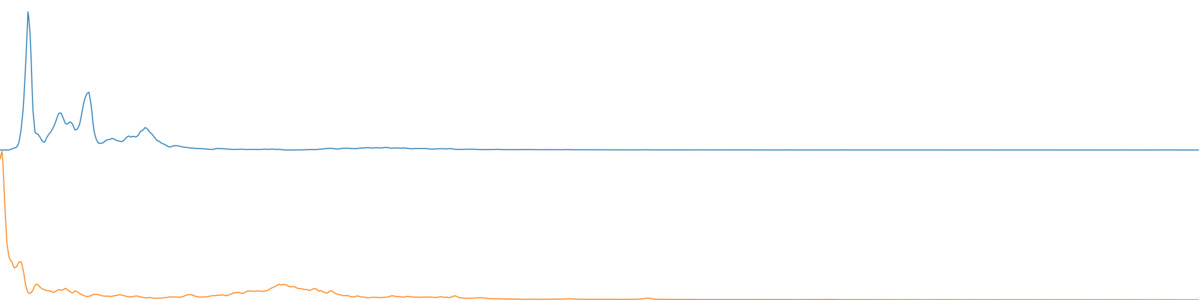

Activations from the four-second BufNMF analysis. (blue = dog bark, orange = the 'rest')

Bases:

Bases from the four-second BufNMF analysis. (blue = dog bark, orange = the 'rest')

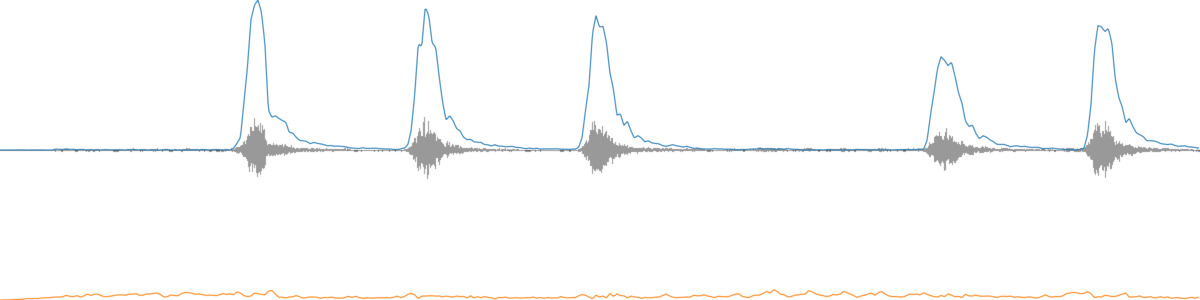

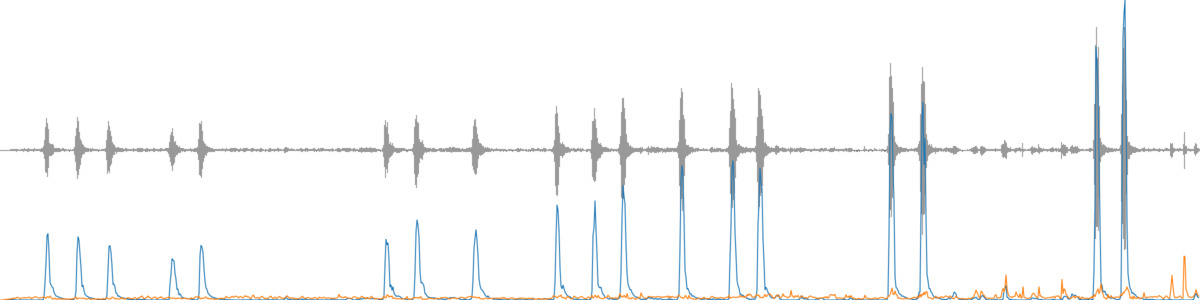

Now we can use these bases with NMFMatch to find where in the whole sound file the dog bark occurs. (The plot below shows the recorded output values of NMFMatch.)

Recorded output values of NMFMatch. The bases were derived from the four-second analysis conducted above, and the entire 22-second sound file was then sent through NMFMatch. (blue = dog bark, orange = the 'rest')
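One way to turn the recorded output into a list of bark locations is to threshold the 'dog bark' curve. The Python sketch below is a minimal version, assuming the output values have been collected into a list at a regular time interval. The function name, the threshold of 1.0, the interval of 0.5 seconds, and the sample values are all hypothetical and would need tuning by inspecting the plot or listening.

```python
def find_active_regions(activations, threshold, seconds_per_value):
    """Return (start, end) times in seconds for each run of values
    that stays above the threshold."""
    regions = []
    start = None  # index where the current above-threshold run began
    for i, value in enumerate(activations):
        if value > threshold and start is None:
            start = i
        elif value <= threshold and start is not None:
            regions.append((start * seconds_per_value, i * seconds_per_value))
            start = None
    if start is not None:
        # The signal was still above the threshold when the recording ended.
        regions.append((start * seconds_per_value,
                        len(activations) * seconds_per_value))
    return regions


# Made-up 'dog bark' output values, one every 0.5 seconds.
bark_curve = [0.0, 0.1, 2.0, 3.0, 0.2, 0.0, 1.5, 1.7]
bark_regions = find_active_regions(bark_curve, threshold=1.0,
                                   seconds_per_value=0.5)
```

A single fixed threshold is the simplest choice. On noisier material, smoothing the curve first or using separate on and off thresholds may give steadier results.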